I have asked myself this question a lot over the last couple of years. Every few months I take some time to reflect seriously on my skills and, more recently and less seriously, whenever I add hashtags to social media posts.

What am I today?

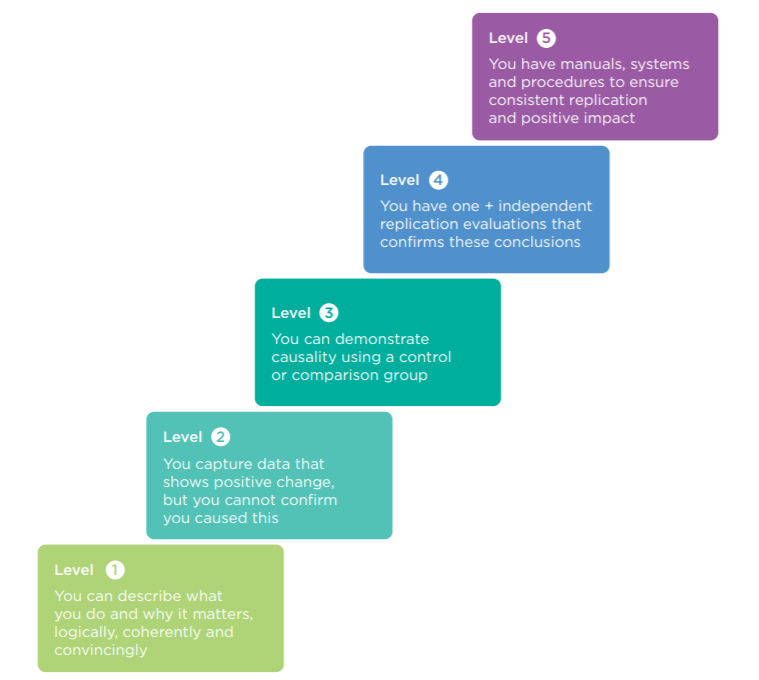

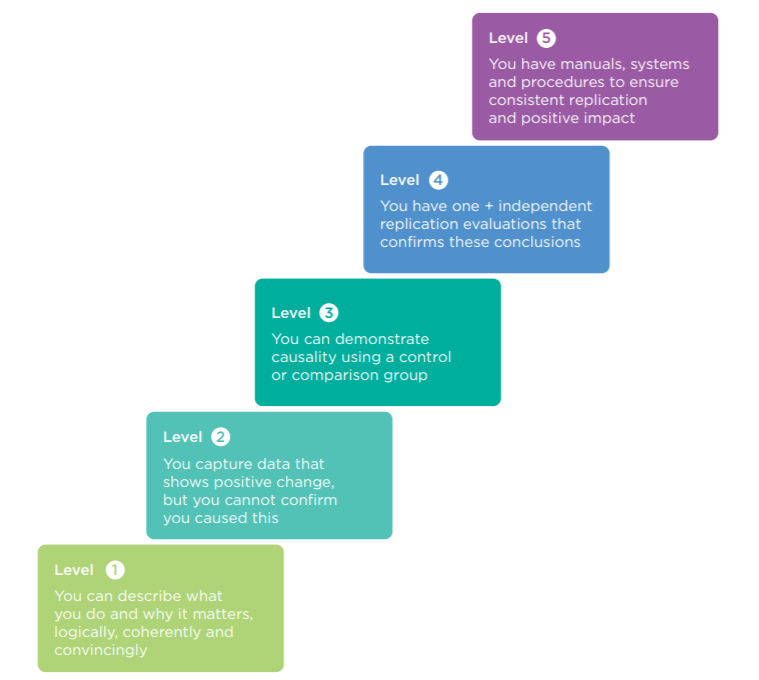

Today I am in the measuring impact camp and, to be honest, that is where my peers would place me on a scale between impact measurement and academic evaluation. I have attended numerous training courses and conferences on both evaluation and impact measurement. I have led large-scale evaluations utilising rigorous, academically accepted research methods, but I have never got beyond level 2 on NESTA’s Standards of Evidence. By the way, there are five levels, and reaching level 3 requires a control or comparison group!

Moment of Clarity

I first started asking myself how to define my skills after attending the ANZEA evaluation conference in New Zealand in 2016, where I was both an attendee and a presenter. Over the two days, I attended many sessions that left me feeling like a fraud with no right to be presenting. Some people had created entirely new approaches to evaluation and spoke a conceptual and theoretical language that, to be honest, I didn’t have or understand!

But as I left the Te Papa museum in Wellington, I realised that my skills lie in creating ways of measuring impact that are realistic, achievable, cost-effective and practical in the real world. There is space for both the academic evaluator and the more practical impact measurer.

Looking into the hourglass

John Lavelle developed an hourglass diagram with research on one side and evaluation on the other. It illustrates how evaluation and research start from different points, funnel into the same methods for answering questions and analysing data, and then diverge again when it is time to report on the outcomes (or processes) under study.

As you can see from the diagram, the key driver for evaluation is having information that informs decision making. Since my work exists to inform decisions, that makes me an evaluator, and some of those ANZEA presenters are actually researchers.

Ask yourself three questions

Whether you are measuring impact, evaluating or carrying out research will depend on how you answer these three questions:

- Who is my audience?

- What is the purpose?

- What is the level of rigour required?

These questions originate from the Social Return on Investment (SROI) methodology, and each one leads you to define your goals for evaluating or measuring impact in detail. The questions are interrelated: the answer to each depends on, and changes with, the answers to the other two.

Does it matter?

In the end, what really matters is that you make informed decisions based on the data you collect and the reports you share with your stakeholders, that the data you use is robust enough for the type of decisions it informs, and that you use a common methodology across all of your programs.

The language you, or I, use to describe how we get the data that informs decision making doesn’t really matter!

Andrew Callaghan is the ASVB Impact Specialist and writes about his experiences in measuring impact and social value.